A write-up of the paper "Cross-Encoder Rediscovers a Semantic Variant of BM25" by Lu, Chen, and Eickhoff (last updated on arXiv on February 7, 2025).

We stand in awe of modern neural ranking models. Transformers like BERT, fine-tuned as cross-encoders, achieve state-of-the-art results on information retrieval leaderboards. They process a query and document together, capturing incredibly nuanced semantic relationships, far surpassing traditional methods like BM25. But how do they do it? We often treat them as powerful black boxes – feed them data, get amazing results, but shrug when asked about the internal logic. Are they truly learning fundamentally new ways to assess relevance, or are they perhaps rediscovering and refining established principles in complex, distributed ways?

A recent paper, "Cross-Encoder Rediscovers a Semantic Variant of BM25" by Lu, Chen, and Eickhoff, provides compelling evidence that a significant part of the cross-encoder's relevance computation closely mirrors the logic of good old BM25, just implemented semantically within the Transformer architecture.
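For reference, here is the standard Okapi BM25 formula, using the IDF variant found in Lucene. The fraction is the TF component. The parameter k_1 (often around 1.2) controls how quickly repeated occurrences saturate, and b (often 0.75) controls how strongly longer-than-average documents are penalized. Here f(q_i, d) is the count of query term q_i in document d, |d| is the document length, avgdl is the average document length, N is the number of documents, and n(q_i) is the number of documents containing q_i. IDF, saturated TF, and length normalization are the three ingredients the paper looks for inside the cross-encoder.

$$
\mathrm{BM25}(q, d) = \sum_{i=1}^{|q|} \mathrm{IDF}(q_i) \cdot \frac{f(q_i, d)\,(k_1 + 1)}{f(q_i, d) + k_1 \left(1 - b + b\,\frac{|d|}{\mathrm{avgdl}}\right)},
\qquad
\mathrm{IDF}(q_i) = \ln\!\left(1 + \frac{N - n(q_i) + 0.5}{n(q_i) + 0.5}\right)
$$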

As someone fascinated by both the power of modern deep learning and the elegant heuristics of classic IR, I find this paper particularly satisfying.

Interpreting Neural Network Circuits

How do you peek inside the black box? The authors employed mechanistic interpretability, a field focused on reverse-engineering the specific algorithms learned by neural networks. Instead of just correlating model behavior with concepts (like probing), it aims to identify the causal mechanisms – the specific components (neurons, attention heads) and pathways ("circuits") responsible for computations.

Their primary tool was path patching. It's a refined version of activation patching (or causal mediation analysis). Imagine you have two inputs: a baseline (query, doc_b) and a perturbed version (query, doc_p) where doc_p differs minimally from doc_b in a way that isolates a specific relevance signal (e.g., adding one more query term occurrence to test TF). Path patching allows researchers to:

- Run both inputs through the model, caching internal activations.

- Identify an upstream component (e.g., an attention head in an early layer) and a downstream component (e.g., a head in a later layer, or the final output).

- Re-run the model on the baseline input, but "patch in" the chosen upstream component's activation from the perturbed run. Let only the chosen downstream component recompute its output from this patched value. Every other component stays frozen at its baseline activation.

- Measure the change in the final output (relevance score).
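The steps above can be illustrated on a toy network. This is a minimal NumPy sketch, not the authors' code. `upstream`, `downstream`, and `score` are hypothetical stand-ins for an early attention head, a later head, and the relevance logit, and all weights are random. The upstream output also reaches the score directly, so patching only the upstream-to-downstream path isolates one route of influence.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 4
W_up = rng.normal(size=(DIM, DIM))    # toy "upstream head" weights (hypothetical)
W_down = rng.normal(size=(DIM, DIM))  # toy "downstream head" weights (hypothetical)
w_out = rng.normal(size=DIM)          # readout to a scalar "relevance score"

def upstream(x):
    return np.tanh(W_up @ x)

def downstream(x, a):
    # The downstream component reads the residual stream x plus the upstream output a.
    return np.tanh(W_down @ (x + a))

def score(x, a=None, b=None):
    """Forward pass; passing a or b overrides (patches) that component's output."""
    a = upstream(x) if a is None else a
    b = downstream(x, a) if b is None else b
    # The upstream output also reaches the score directly, bypassing downstream.
    return float(w_out @ (x + a + b))

def path_patch(x_base, x_pert):
    """Score change caused only by the upstream -> downstream path."""
    a_base = upstream(x_base)
    a_pert = upstream(x_pert)
    # Only the downstream component recomputes, and it sees the perturbed upstream value.
    b_patched = downstream(x_base, a_pert)
    # Everything else, including the direct upstream -> score path, stays at baseline.
    return score(x_base, a=a_base, b=b_patched) - score(x_base)

x_base = rng.normal(size=DIM)
x_pert = x_base.copy()
x_pert[0] += 1.0  # a minimal, targeted perturbation
path_effect = path_patch(x_base, x_pert)
total_effect = score(x_pert) - score(x_base)
print(f"path effect {path_effect:.4f} vs total effect {total_effect:.4f}")
```

Comparing `path_effect` with `total_effect` shows why the distinction matters. The total change also includes the direct upstream-to-score route, which path patching deliberately holds at baseline.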

By systematically patching paths between components, they could trace how specific relevance signals flow through the network and identify which attention heads compute or relay each piece of information. To isolate the effects they wanted to trace, they used carefully constructed "diagnostic datasets" based on IR axioms such as TFC1 and LNC1. These are formal constraints that capture the term-frequency and document-length heuristics behind BM25.
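As an illustration of how such diagnostic pairs might be built, here is a small Python sketch. TFC1 says that, of two documents of equal length, the one with more occurrences of a query term should score higher. LNC1 says that adding non-query terms to a document should not raise its score. The helper names `tfc1_pair` and `lnc1_pair` are assumptions for illustration, as is the choice to overwrite a non-query token so that length stays fixed. The paper's exact construction may differ.

```python
import random

def tfc1_pair(query_terms, doc_tokens, term, rng):
    """(baseline, perturbed) docs where perturbed has one more occurrence of `term`.

    A randomly chosen non-query token is overwritten, so both documents keep the
    same length and only the query-term frequency changes."""
    if term not in query_terms:
        raise ValueError("term must be a query term")
    slots = [i for i, tok in enumerate(doc_tokens) if tok not in query_terms]
    if not slots:
        raise ValueError("no non-query token available to overwrite")
    perturbed = list(doc_tokens)
    perturbed[rng.choice(slots)] = term
    return list(doc_tokens), perturbed

def lnc1_pair(query_terms, doc_tokens, filler):
    """(baseline, perturbed) docs where perturbed is longer by non-query filler tokens."""
    if any(tok in query_terms for tok in filler):
        raise ValueError("filler must not contain query terms")
    return list(doc_tokens), list(doc_tokens) + list(filler)

rng = random.Random(0)
query = {"solar", "panel"}
doc = "the solar panel converts sunlight into electricity".split()
tf_base, tf_pert = tfc1_pair(query, doc, "solar", rng)
ln_base, ln_pert = lnc1_pair(query, doc, "it was installed last spring".split())
print(tf_pert)
print(ln_pert)
```

Each pair would then be scored by the cross-encoder, and fed to path patching, to check whether the model's scores respect the axiom and through which heads.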

The Discovery: Finding BM25 Components Inside MiniLM

Through this meticulous "detective work," the researchers uncovered a fascinating internal circuit closely resembling BM25:

- "Matching Heads" (Early Layers): Computing Semantic TF + Saturation/Length: They identified a set of attention heads (~13 of them) primarily in the early-to-mid layers. These heads perform a semantic matching function. Query tokens attend strongly to identical and semantically similar document tokens. The sum of attention values from a query token to all document tokens acts like a soft-TF score. Crucially, analyzing these heads on diagnostic datasets showed they inherently capture term saturation (repeated term occurrences yield diminishing returns in attention score) and document length normalization (longer docs increase overall attention). They termed the complex signal computed by these heads the "Matching Score."

- IDF Lives in the Embedding Matrix (Low-Rank Component): Where does IDF come from? The researchers performed Singular Value Decomposition (SVD) on the model's token embedding matrix (W_E). They found that the first singular component (U0), the most dominant direction in the embedding space, was strongly negatively correlated (-71%) with the IDF values of words in the training corpus. This suggests the model encodes IDF information directly in this dominant embedding component. They validated this causally with interventions: they directly modified the U0 value of specific tokens and observed the resulting change in relevance scores (Fig 8). Increasing the (negatively correlated) U0 value of a token decreased its contribution to relevance, and vice versa, mirroring IDF's effect.

- "Contextual Query Representation Heads" (Mid-Late Layers): Distributing TF based on IDF: Before the final scoring, another set of heads (e.g., 8.10, 9.11) seems to refine the query representation. They appear to take the TF signals computed earlier and redistribute them across query tokens, strengthening the representation of higher-IDF terms.

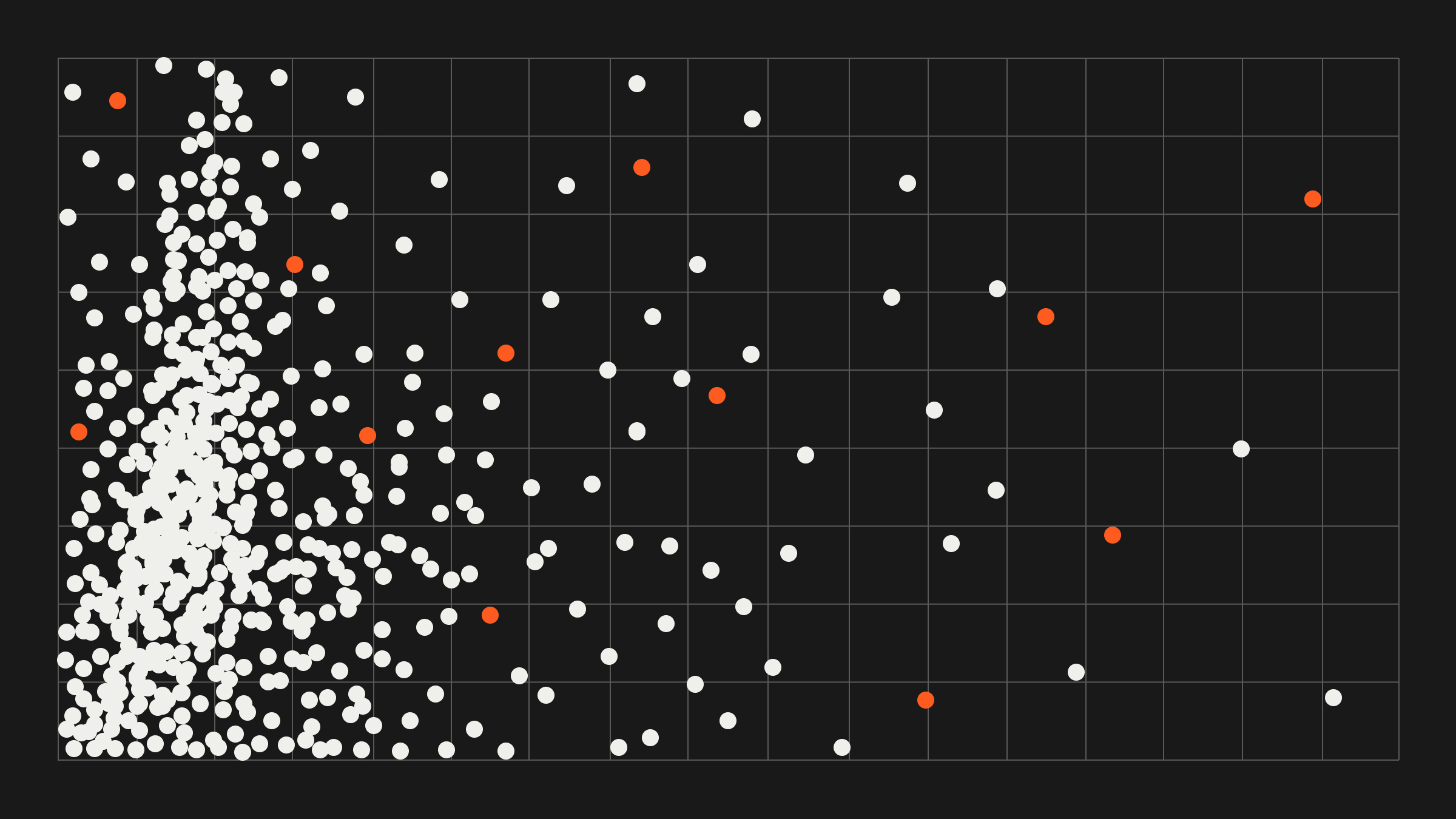

- "Relevance Scoring Heads" (Late Layers): The Final Summation: Finally, a small set of critical heads in layer 10 (10.1, 10.4, 10.7, and 10.10, identified via path patching to the logit) gathers the processed signals. Their behavior strongly suggests they perform the final BM25-like computation. They selectively attend to query tokens based on their IDF (different heads focus on different IDF ranges, see Fig 4). They then retrieve the corresponding Matching Scores (soft-TF + saturation/length) and effectively compute a weighted sum, analogous to Σ IDF(qi) * TF_component(qi, d).
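A toy version of the Matching Score and the final weighted sum can make the bullets above concrete. This sketch is a simplification, not the paper's implementation. A query token's Matching Score is taken to be the total softmax attention it places on document tokens. Three fixed "sink" logits stand in for positions such as [CLS], the query tokens, and [SEP]. The temperature and sink values are arbitrary assumptions. Even so, the toy shows diminishing returns as TF grows, more attention on longer documents, and an IDF-weighted sum across query tokens.

```python
import numpy as np

def doc_attention_mass(sims, sink_logits=(2.0, 2.0, 2.0), temperature=4.0):
    """Softmax attention mass one query token places on all document tokens.

    sims: similarity of the query token to each document token (1.0 = exact match,
          0.0 = unrelated). sink_logits: logits for non-document positions such as
          [CLS], query tokens, and [SEP]; the default values are illustrative only."""
    doc_logits = temperature * np.asarray(sims, dtype=float)
    logits = np.concatenate([doc_logits, np.asarray(sink_logits, dtype=float)])
    weights = np.exp(logits - logits.max())  # numerically stable softmax
    weights /= weights.sum()
    return float(weights[: len(doc_logits)].sum())

def toy_semantic_bm25(idf, sims_per_query_token):
    """IDF-weighted sum of per-query-token matching scores: sum_i idf_i * ms_i."""
    return sum(w * doc_attention_mass(s) for w, s in zip(idf, sims_per_query_token))

# Saturation: with document length fixed at 20 tokens, each extra exact match adds less.
masses = [doc_attention_mass([1.0] * tf + [0.0] * (20 - tf)) for tf in range(5)]
print(np.round(np.diff(masses), 3))

# Length: padding with unrelated tokens raises the total attention on the document.
print([round(doc_attention_mass([1.0] + [0.0] * k), 3) for k in (5, 50, 500)])

# IDF weighting: the same match counts for more on a rare (high-IDF) query token.
doc_sims = [[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # token 1 matches, token 2 does not
print(toy_semantic_bm25([6.0, 1.0], doc_sims), toy_semantic_bm25([1.0, 6.0], doc_sims))
```

In this toy, saturation comes from softmax normalization itself. Each new match enlarges the denominator as well as the numerator, so its marginal contribution shrinks, much like the k_1 term in BM25.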

Essentially, the cross-encoder seems to have learned, without ever being explicitly told about BM25, to implement specialized components for each part of a semantic BM25 calculation! (See the hypothesized circuit in Fig 2.)
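The IDF analysis from the list above can be sketched with NumPy's SVD. Everything here is synthetic and assumed. The embedding matrix is random noise plus a planted direction scaled by made-up IDF values, so the strong correlation is built in. With a real model you would use its actual token embedding matrix and IDF values computed from the corpus. The hypothetical helper `shift_along_first_component` mimics the kind of intervention described above by moving one token's embedding along the top singular direction.

```python
import numpy as np

def first_component_scores(W_E):
    """Coordinate of every token embedding (row of W_E) along the top singular direction."""
    U, S, _ = np.linalg.svd(W_E, full_matrices=False)
    return U[:, 0] * S[0]

def shift_along_first_component(W_E, token_id, delta):
    """Copy of W_E with one token's embedding moved by delta along the top direction."""
    _, _, Vt = np.linalg.svd(W_E, full_matrices=False)
    edited = W_E.copy()
    edited[token_id] += delta * Vt[0]
    return edited

# Synthetic stand-in: a real experiment would use the model's W_E and corpus IDF values.
rng = np.random.default_rng(0)
vocab, dim = 500, 64
idf = rng.uniform(1.0, 8.0, size=vocab)
direction = rng.normal(size=dim)
direction /= np.linalg.norm(direction)
W_E = np.outer(-idf, direction) + 0.3 * rng.normal(size=(vocab, dim))

u0 = first_component_scores(W_E)
print("correlation of top component with IDF:", round(np.corrcoef(u0, idf)[0, 1], 3))
```

Singular vectors are only defined up to sign, so the sign of the correlation depends on the SVD's convention. What carries meaning is the magnitude, together with a consistent choice of direction for interventions.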

Validating the Finding

To confirm their understanding wasn't just a nice story, the authors performed a clever validation (§5). They defined a linear model (SemanticBM) whose features were exactly the components they identified: the IDF values extracted from the embedding matrix (U0) and the Matching Scores (MS) computed by the Matching Heads, plus their interaction term. They trained this linear model to predict the cross-encoder's actual relevance scores on test data.

The result? This simplified linear model achieved a high median Pearson correlation (0.84) with the full cross-encoder's scores across various datasets and query lengths. That is far above the correlation of the traditional BM25 function (0.46), showing that the identified components capture the core semantic computation of the cross-encoder much more effectively than the original lexical BM25.
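The SemanticBM check can be sketched as an ordinary least-squares fit followed by a Pearson correlation. Two things here are assumptions: the helper names, and how per-token IDF and Matching Scores are combined into one feature vector per query-document pair (here, summed over query tokens). The "cross-encoder" scores in the demo are synthetic, not the paper's data.

```python
import numpy as np

def pair_features(idf_tokens, ms_tokens):
    """[sum IDF, sum MS, sum IDF*MS] for one query-document pair (aggregation is assumed)."""
    idf_t = np.asarray(idf_tokens, dtype=float)
    ms_t = np.asarray(ms_tokens, dtype=float)
    return [idf_t.sum(), ms_t.sum(), float((idf_t * ms_t).sum())]

def fit_semantic_bm(features, ce_scores):
    """Least squares for ce ~ b0 + b1*IDF + b2*MS + b3*(IDF*MS); returns (coef, predictions)."""
    X = np.column_stack([np.ones(len(features)), np.asarray(features, dtype=float)])
    y = np.asarray(ce_scores, dtype=float)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef, X @ coef

def pearson(a, b):
    return float(np.corrcoef(a, b)[0, 1])

# Synthetic demo: per-token IDF and Matching Scores for 200 pairs of 1-5 query tokens.
rng = np.random.default_rng(0)
pairs = [(rng.uniform(1, 8, size=k), rng.uniform(0, 1, size=k))
         for k in rng.integers(1, 6, size=200)]
feats = [pair_features(idf, ms) for idf, ms in pairs]
# Stand-in "cross-encoder" scores: an interaction effect plus noise (made up).
ce = np.array([0.3 + 0.9 * f[2] for f in feats]) + rng.normal(0.0, 0.5, size=len(feats))
coef, pred = fit_semantic_bm(feats, ce)
print("coefficients:", np.round(coef, 2), "Pearson r:", round(pearson(pred, ce), 3))
```

A real replication would compute the fit and the correlation on held-out query-document pairs. It would also correlate BM25 with the same cross-encoder scores for comparison.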

What About Hybrid Search?

If cross-encoders are effectively doing semantic BM25, why is hybrid search so often state-of-the-art? Hybrid systems typically combine results from traditional BM25 (lexical matching) and dense vector retrieval (semantic matching, often using bi-encoders) before potentially reranking with a cross-encoder. If the cross-encoder already incorporates BM25 principles, why the redundancy?

This is a great question, and the answer likely lies in a few key points:

- Complementary Strengths for Recall: The first stage of search focuses on retrieving a broad set of potentially relevant candidates (high recall). Lexical BM25 excels at finding documents with exact keyword matches (important for codes, names, rare terms), while dense retrieval excels at finding conceptually similar documents even without keyword overlap. Using both often provides a richer, more diverse candidate set than either alone.

- Cross-Encoders as Powerful Rerankers: The cross-encoder is typically used in the second stage to precisely rerank the top candidates from the first stage (high precision). This paper helps explain why it's such a good reranker: its internal semantic BM25 logic allows it to effectively weigh term importance (IDF) against semantic term frequency (soft-TF) and other factors within the context of the specific query-document pair.

- Approximation, Not Identity: The linear model achieved a 0.84 correlation, not 1.0. This suggests the semantic BM25 circuit captures a core part, but not the entirety, of the cross-encoder's computation. The cross-encoder likely captures additional complex semantic interactions, real-world knowledge from pre-training, and nuances that go beyond the BM25 analogy. These extra capabilities further enhance its reranking power.

So, rather than contradicting the value of hybrid search, this paper's findings help explain the effectiveness of using cross-encoders within those hybrid systems. The cross-encoder isn't just doing BM25, but the fact that it has learned a sophisticated, semantic version of this core logic likely contributes significantly to its state-of-the-art reranking performance, complementing the strengths of the initial lexical and dense retrieval stages.
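To make the two-stage setup concrete, here is a hedged sketch of one common hybrid recipe. First, fuse the lexical and dense candidate lists with reciprocal rank fusion (RRF, score = Σ 1/(k + rank) with the conventional k = 60). Then rerank the fused head with a cross-encoder. RRF is not something the paper prescribes. `rerank_score` is a hypothetical placeholder for a cross-encoder call.

```python
from collections import defaultdict

def reciprocal_rank_fusion(rankings, k=60):
    """Fuse ranked lists of doc ids: score(d) = sum over lists of 1 / (k + rank)."""
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc id for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))

def hybrid_then_rerank(bm25_run, dense_run, rerank_score, depth=100):
    """Stage 1: fuse lexical and dense candidates. Stage 2: rerank the top `depth`."""
    candidates = reciprocal_rank_fusion([bm25_run, dense_run])[:depth]
    return sorted(candidates, key=rerank_score, reverse=True)

bm25_run = ["d3", "d1", "d7"]   # e.g. exact keyword hits
dense_run = ["d2", "d3", "d9"]  # e.g. semantic neighbours
print(reciprocal_rank_fusion([bm25_run, dense_run]))  # → ['d3', 'd2', 'd1', 'd7', 'd9']

toy_scores = {"d9": 0.9, "d1": 0.7}  # hypothetical cross-encoder outputs
print(hybrid_then_rerank(bm25_run, dense_run, lambda d: toy_scores.get(d, 0.0)))
```

Here d3 leads the fused list because both retrievers found it. The reranker is still free to promote a document, such as d9, that only one first-stage retriever surfaced.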

Conclusion

The idea that a state-of-the-art cross-encoder effectively rediscovers and implements a semantic version of BM25 is both surprising and deeply satisfying. It suggests that the principles underlying BM25 are perhaps more fundamental to relevance than we might have assumed, and that Transformer architectures are adept at learning these principles implicitly. This research, through elegant mechanistic interpretability, provides not just a fascinating insight but also a potential roadmap for building more transparent, controllable, and perhaps ultimately, better neural ranking models. It's a compelling piece of evidence that even inside the most complex networks, sometimes you find familiar, elegant logic at work.

I highly recommend digging into the full paper for the detailed methodology and results!
