A write-up on the RecSys '24 paper "EmbSum: Leveraging the Summarization Capabilities of Large Language Models for Content-Based Recommendations", a collaborative study by Meta AI (USA), UBC (Canada), and Monash University (Australia).

Item-User Profile Matching

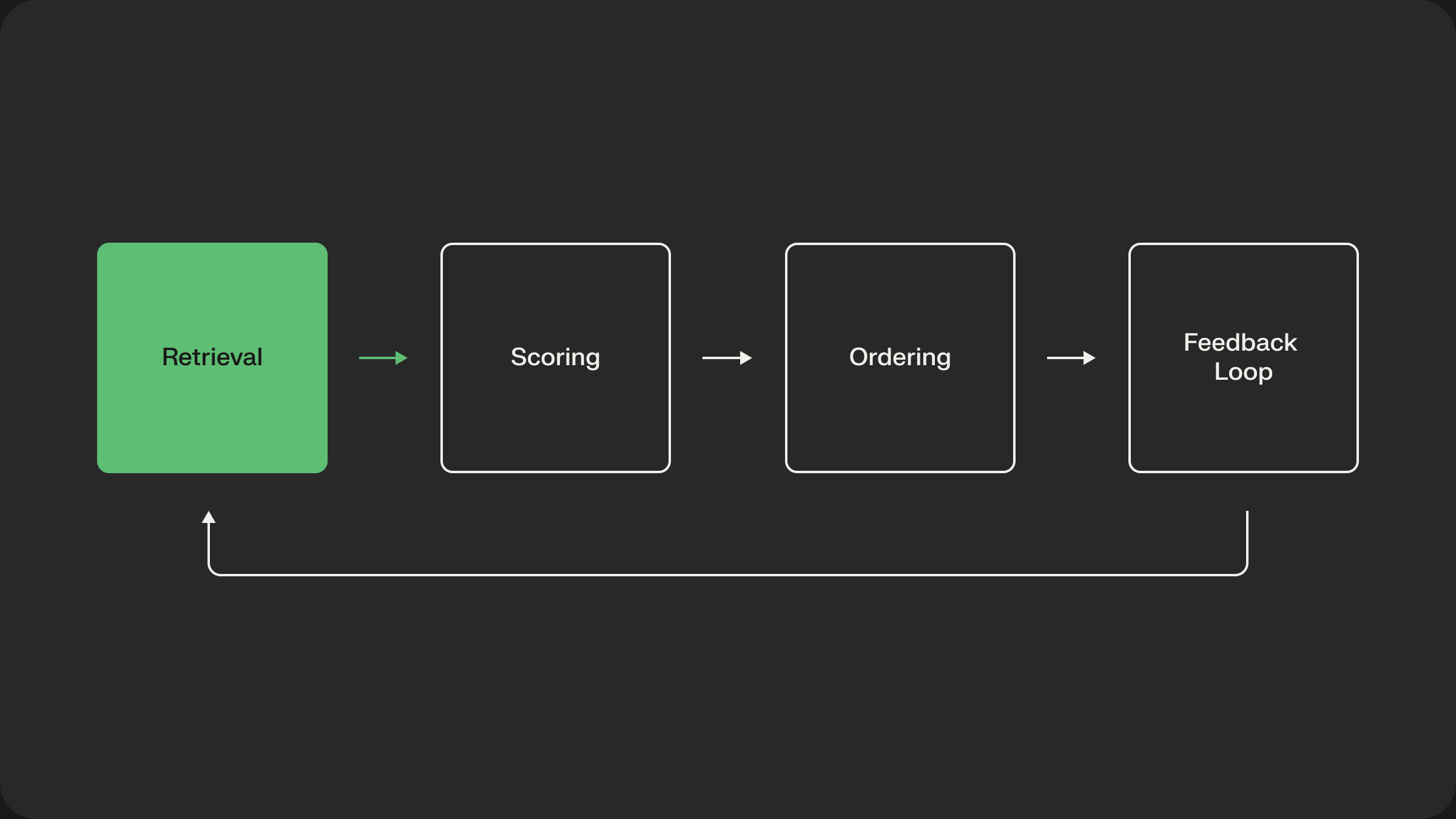

Content-based recommendations are a type of recommender system that suggests items to users based on the features of items they have previously interacted with or expressed interest in. The core components of a content-based recommender system include:

- Item analyzer: Extracts relevant features from item contents or metadata

- User profile builder: Collects and processes user preference data

- Recommendation engine: Matches user interests with item features using similarity metrics, such as cosine similarity or dot product

Content-based filtering operates by analyzing the similarity between item vectors and user preference vectors, often employing machine learning algorithms to classify items as relevant or irrelevant to a specific user. Because it relies on item features rather than on the behavior of other users, this approach is particularly effective when interaction data is limited.
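The matching step described above can be illustrated with a minimal sketch. It assumes hand-made three-dimensional feature vectors and a user profile built by simple averaging; the item names, the feature axes, and the helper functions are hypothetical and are not taken from the paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def build_user_profile(item_vectors):
    """Average the feature vectors of the items a user engaged with."""
    n = len(item_vectors)
    return [sum(column) / n for column in zip(*item_vectors)]


def rank_items(user_profile, candidates):
    """Return candidate ids sorted by similarity to the profile, best first."""
    scored = [
        (cosine_similarity(user_profile, vector), item_id)
        for item_id, vector in candidates.items()
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item_id for _, item_id in scored]


# Hypothetical feature axes: [sports, politics, tech]
history = [[1.0, 0.0, 0.2], [0.8, 0.1, 0.0]]
candidates = {
    "match_report": [0.9, 0.0, 0.1],
    "election_news": [0.0, 1.0, 0.0],
    "gadget_review": [0.1, 0.0, 1.0],
}

profile = build_user_profile(history)
ranking = rank_items(profile, candidates)
print(ranking)
```

Because item vectors and the user profile are computed independently, item vectors can be cached ahead of time and only the final similarity needs to run at request time.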

Revolutionizing Content Recommendations with EmbSum

Recent advancements in recommendation systems have explored the integration of Pretrained Language Models (PLMs) to enhance content-based recommendations. Traditional methods often encoded each item in a user's history individually, limiting the ability to capture complex interactions across engagement sequences. Mao et al. introduced hierarchical encoding techniques that leveraged local and global attention to partially address this issue, though the need to truncate history sequences to 1,000 tokens limited their efficacy.

Other research focused on online real-time user modeling by integrating candidate items directly into user profiles. While this improved the alignment between user preferences and recommendations, it restricted systems from leveraging offline pre-computation for efficient inference in real-world applications.

To address these limitations, EmbSum presents an innovative framework leveraging a pretrained encoder-decoder model and poly-attention layers. This approach enables:

- User Poly-Embedding (UPE): Captures nuanced user interests by encoding long engagement histories.

- Content Poly-Embedding (CPE): Encodes candidate items with richer semantic representations for better content matching.

Supervised by Large Language Models (LLMs), EmbSum generates comprehensive user-interest summaries that improve both recommendation accuracy and system interpretability. The paper's contributions build on existing literature while offering a novel solution to optimize content recommendations in resource-constrained environments.

User Poly-Embedding Techniques

User Poly-Embedding (UPE) techniques in EmbSum leverage advanced neural architectures to capture complex user preferences across multiple modalities. The framework employs a Transformer-based encoder with poly-attention layers to process diverse ID-based features, such as unique item identifiers, categories, and user ratings. These features are first mapped to unique embedding representations and then combined into single embeddings before being input to the encoder.

The key innovation lies in the use of an autoregressive transformer to predict subsequent tokens from previous ones in user activity sequences. This allows EmbSum to model temporal dependencies and evolving user interests effectively. The output embeddings from this process provide rich user context for personalized LLM responses, enabling more accurate content recommendations while maintaining computational efficiency.

Content Poly-Embedding Applications

Content Poly-Embedding (CPE) in EmbSum extends beyond traditional item representations to capture rich semantic information across diverse content types. This technique leverages pre-trained encoder-decoder models to generate embeddings that encapsulate both the surface-level features and deeper contextual meaning of items.

CPE applications include:

- Multimodal content representation: Integrating text, image, and metadata features into a unified embedding space, similar to CLIP's approach for text-image embeddings.

- Semantic similarity search: Enabling efficient retrieval of related content based on conceptual similarity rather than just keyword matching.

- Cross-domain recommendations: Facilitating transfer learning between different content domains by mapping items to a shared semantic space.

By employing poly-attention layers, CPE can dynamically weight different aspects of content based on their relevance to user preferences, enhancing the personalization of recommendations.
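To make the idea of poly-attention more concrete, here is a minimal sketch of a layer that pools a sequence of token embeddings into several embeddings, one per context code. It assumes an additive form in which each code scores tokens through `tanh(h W)` followed by a softmax; the exact parameterization used in EmbSum, the dimensions, and the randomly initialized `proj` and `codes` (stand-ins for learned parameters) are all assumptions made for illustration.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def poly_attention(tokens, codes, proj):
    """Pool n token vectors (n x d) into m vectors (m x d), one per context code.

    tokens: n x d token embeddings
    codes:  m x p context codes (learned in a real model)
    proj:   d x p projection matrix (learned in a real model)
    """
    d, p = len(proj), len(proj[0])
    # Project every token and squash it: tanh(h W)
    projected = [
        [math.tanh(sum(h[i] * proj[i][j] for i in range(d))) for j in range(p)]
        for h in tokens
    ]
    outputs = []
    for code in codes:
        # One attention distribution over tokens per context code
        scores = [sum(c * x for c, x in zip(code, row)) for row in projected]
        weights = softmax(scores)
        # Weighted sum of the original token embeddings
        pooled = [sum(w * h[k] for w, h in zip(weights, tokens)) for k in range(d)]
        outputs.append(pooled)
    return outputs


random.seed(0)
d, p, n_tokens, n_codes = 4, 3, 5, 2
tokens = [[random.uniform(-1, 1) for _ in range(d)] for _ in range(n_tokens)]
proj = [[random.uniform(-1, 1) for _ in range(p)] for _ in range(d)]
codes = [[random.uniform(-1, 1) for _ in range(p)] for _ in range(n_codes)]

poly_embeddings = poly_attention(tokens, codes, proj)
print(len(poly_embeddings), len(poly_embeddings[0]))
```

Each context code yields its own attention distribution over the tokens, so different codes can emphasize different aspects of the same content.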

Key Datasets and Pioneering Models

The EmbSum framework builds upon several key datasets and models in the field of news recommendation and language model instruction tuning. The MIND dataset serves as a benchmark for news recommendation research, containing over 160,000 English news articles and 15 million user impression logs. UNBERT, MINER, and UniTRec are state-of-the-art models for news recommendation, each employing unique approaches to enhance recommendation accuracy. Self-Instruct introduces a novel method for aligning language models with self-generated instructions, potentially improving the summarization capabilities utilized in EmbSum.

LLM-Supervised Interest Summaries

EmbSum leverages large language models (LLMs) to generate and supervise user interest summaries, enhancing the system's ability to capture long-term user preferences. This approach utilizes the advanced natural language understanding and generation capabilities of LLMs to create concise, semantically rich representations of user interests.

The LLM-supervised summary generation process involves:

- Extracting key information from user engagement history

- Generating a coherent summary of user interests

- Refining the summary based on LLM feedback to ensure relevance and accuracy

By incorporating LLM-supervised interest summaries, EmbSum achieves a more comprehensive understanding of user preferences, leading to improved recommendation accuracy and personalization. This approach also aligns with recent trends in leveraging LLMs for user modeling and personalized content delivery across various domains.

Evaluation and Results

EmbSum was evaluated on the MIND and Goodreads datasets and compared against state-of-the-art baselines including UNBERT, MINER, and UniTRec. The metrics were AUC (Area Under the ROC Curve), MRR (Mean Reciprocal Rank), and nDCG@5 and nDCG@10 (Normalized Discounted Cumulative Gain). Results showed:

MIND dataset:

- AUC: 71.95 (+0.22 over UNBERT)

- MRR: 38.58 (+0.48 over MINER)

- nDCG@10: 42.97 (+0.05 over UNBERT)

Goodreads dataset:

- AUC: 61.64 (+0.24 over UNBERT)

- MRR: 73.75 (+0.41 over UNBERT)

- nDCG@10: 69.08 (+0.06 over CAUM)

EmbSum achieved these results with fewer parameters (61M) than BERT-based methods (125M+).
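For readers unfamiliar with the ranking metrics reported above, the following sketch computes AUC, MRR, and nDCG@k for a single impression (one list of candidates with click labels). It is an illustration under assumptions: the MRR here averages reciprocal ranks over all clicked items, which is one common convention in news recommendation code, while other definitions use only the first relevant item. The paper's exact evaluation script is not reproduced, and the labels and scores are made up. The figures quoted above appear to be these metrics scaled by 100.

```python
import math


def auc(labels, scores):
    """Probability that a random clicked item outscores a random non-clicked one."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    # Ties between a positive and a negative count as half a win
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def ranked_labels(labels, scores):
    """Labels reordered by descending score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]


def mrr(labels, scores):
    """Average of 1/rank over all clicked items (assumed convention)."""
    ranked = ranked_labels(labels, scores)
    total = sum(rel / (pos + 1) for pos, rel in enumerate(ranked))
    return total / sum(ranked)


def ndcg_at_k(labels, scores, k):
    """DCG of the predicted ranking divided by the DCG of the ideal ranking."""
    def dcg(ranked):
        return sum((2 ** rel - 1) / math.log2(pos + 2)
                   for pos, rel in enumerate(ranked[:k]))
    ideal = dcg(sorted(labels, reverse=True))
    return dcg(ranked_labels(labels, scores)) / ideal if ideal > 0 else 0.0


# Made-up impression: 1 = clicked, 0 = not clicked
labels = [0, 1, 0, 1, 0]
scores = [0.2, 0.9, 0.6, 0.4, 0.1]

example_auc = auc(labels, scores)
example_mrr = mrr(labels, scores)
example_ndcg5 = ndcg_at_k(labels, scores, 5)
print(round(example_auc, 4), round(example_mrr, 4), round(example_ndcg5, 4))
```

In practice each metric is computed per impression and then averaged over all impressions in the test set.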

EmbSum's Transformative Impact

EmbSum advances content-based recommendation systems by leveraging large language models for user interest profiling and content summarization. Key innovations include:

- Long history processing: Handles up to 7,440 tokens for MIND and 7,740 for Goodreads, well beyond the 1,000-token limit of earlier work.

- User interest summarization: Generates human-readable summaries, achieving ROUGE-L scores of 39.12 (MIND) and 28.16 (Goodreads), demonstrating its effectiveness in capturing user preferences from engagement history.

- Poly-embedding performance: Content Poly-Embedding (CPE) significantly contributes to model success.

- LLM-supervised summarization: Improves performance on both datasets.

- Robust hyperparameters: The model demonstrates stability across various settings, with AUC scores on the MIND dataset varying by only about 0.33 points (70.43 to 70.76) when adjusting key parameters.
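The ROUGE-L scores mentioned in the list above measure overlap between a generated summary and a reference through their longest common subsequence (LCS). The sketch below computes an F1-style ROUGE-L on whitespace tokens; the tokenization, the F1 weighting, and the two example sentences are assumptions for illustration and may differ from the scoring tool used in the paper.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, start=1):
            if x == y:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l_f1(reference, candidate):
    """F1 of LCS-based precision and recall on lowercase whitespace tokens."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)
    recall = lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


# Hypothetical reference (LLM-written) and generated user-interest summaries
reference = "the user follows nfl football and us politics"
candidate = "the user reads nfl news and politics"

score = rouge_l_f1(reference, candidate)
print(round(score, 4))
```

Unlike n-gram overlap, the LCS rewards words that appear in the same order even when other words sit between them.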

By combining LLM-based summarization with poly-attention mechanisms, EmbSum enhances personalized content delivery, improving accuracy and interpretability. This paves the way for more sophisticated, context-aware recommendation systems.