This paper, by Bailu Ding (Microsoft) and Jiaqi Zhai (Meta), which is in the proceedings of the WWW '25 conference, proposes a novel approach called Mixture of Logits (MoL) that offers a generalized interface for learned similarity functions. It not only achieves state-of-the-art results across recommendation systems and question answering but also demonstrates significant latency improvements, potentially reshaping the landscape of vector databases.

The Problem with Dot Products and Learned Similarities

While effective, dot product similarity has inherent limitations. It assumes a linear relationship between embeddings, which might not capture the complex nuances of real-world data. Previous attempts to move beyond dot products have faced challenges:

- Computational Cost: Learned similarity functions are often computationally expensive, hindering their applicability in latency-sensitive applications.

- MIPS Compatibility: Many alternative methods don't play well with MIPS algorithms, which are highly optimized for dot product search.

- Lack of Generalization: Existing solutions lack a universal interface, making it difficult to build general-purpose retrieval systems.

Enter Mixture of Logits (MoL)

The core idea is that many advanced retrieval techniques aim for more expressive similarity calculations—moving beyond the simple linear relationship of dot products. "Expressiveness" here means capturing subtle nuances between queries and items that a dot product might miss. The paper formalizes this with 𝑝(𝑥 | 𝑞), the probability of retrieving item x given query q, which encompasses dot products as a simplified case. MoL leverages this by approximating 𝑝(𝑥 | 𝑞) as a mixture of dot products, boosting expressiveness while remaining computationally efficient.

Instead of relying on a single pair of embeddings for query and item, MoL uses P pairs of "component embeddings." It then applies adaptive gating weights 𝜋_p (between 0 and 1) to the inner product of these pairs:

This formulation is not only more expressive than a single dot product but also allows for efficient batching on GPUs. Crucially, the authors introduce a load balancing regularization loss based on mutual information. This ensures that the different component embeddings are effectively utilized during training, preventing any single pair from dominating.

Intuition behind the mutual information loss

The load balancing loss aims to ensure that all component embeddings contribute meaningfully to the similarity calculation. It leverages mutual information, a measure of the statistical dependence between two variables. In this case, the variables are the selected embedding pair p and the (query, item) pair (q, x).

The loss has two components:

- Maximize H(p): H(p) represents the entropy of the distribution over embedding pairs. Maximizing entropy encourages a uniform distribution, meaning that all embedding pairs are used roughly equally during training. This prevents any single pair from being underutilized.

- Minimize H(p|(q, x)): H(p|(q, x)) is the conditional entropy of p given (q, x). Minimizing this term encourages the selection of embedding pairs to be informative about the specific query and item. In other words, for a given (query, item) pair, the model should ideally rely on a specific subset of embedding pairs rather than using all of them uniformly.

By balancing these two forces, the load balancing loss ensures that all embedding pairs are trained effectively while also allowing the model to learn which pairs are most relevant for different (query, item) combinations.

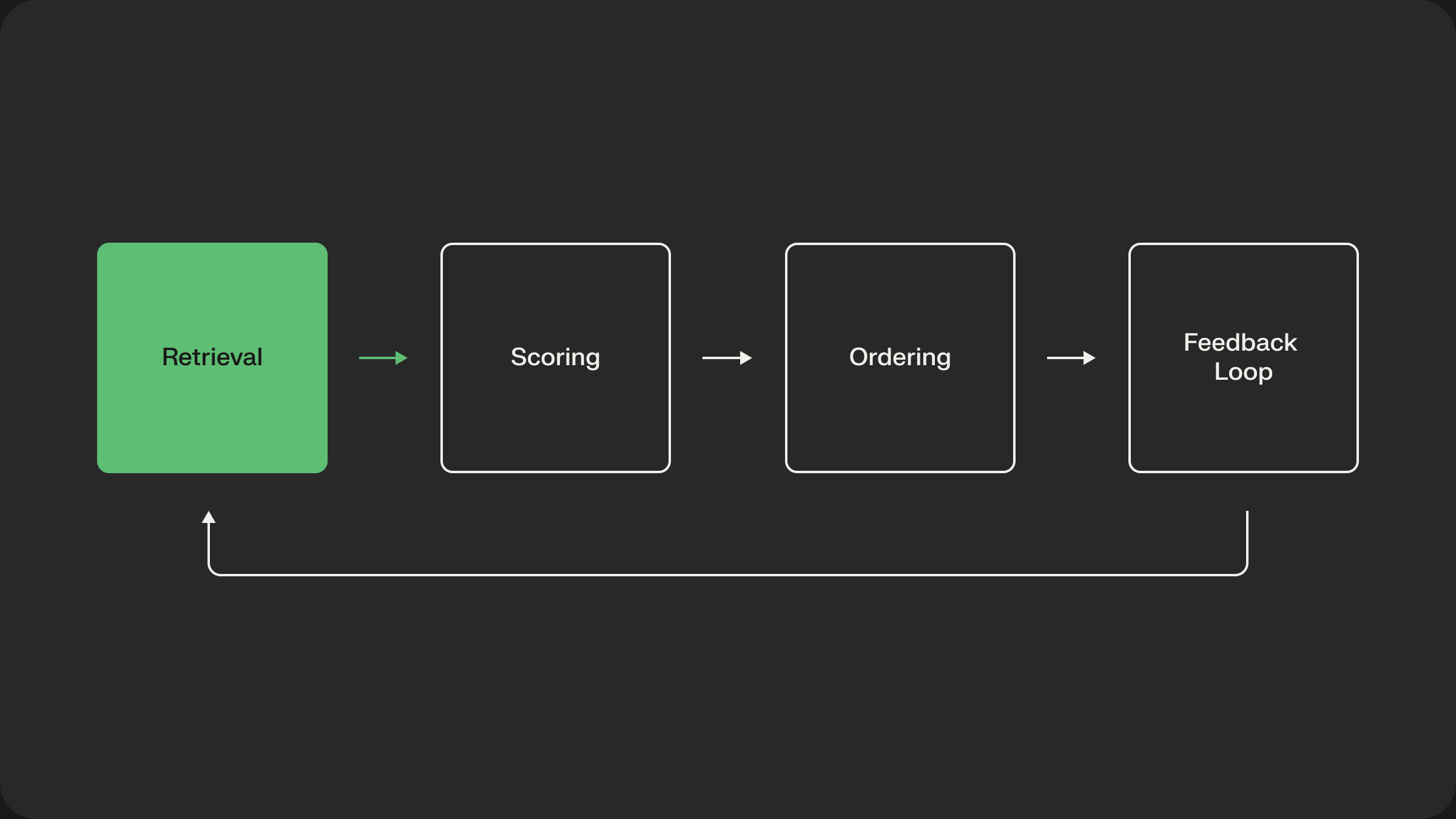

RAILS: Retrieval with Learned Similarities

The authors combine MoL with accelerated top-k retrieval algorithms to create RAILS. They propose efficient heuristics for approximate top-k retrieval, significantly reducing latency while maintaining high recall rates.

Two-stage approximate algorithm and the heuristics

The two-stage approximate top-k retrieval algorithm aims to reduce the computational cost of evaluating MoL for every item in a large dataset.

Stage 1: Candidate Retrieval This stage oversamples a set of candidate items that are likely to have high MoL scores. It uses heuristics based on dot product similarity to quickly identify these candidates.

Stage 2: MoL Evaluation The MoL score is computed only for the candidate items retrieved in Stage 1. The top-k items based on these MoL scores are then returned.

The paper proposes two heuristics for candidate retrieval:

- Top-k embedding (TopKPerEmbd): For each of the P embedding pairs, perform a standard top-k dot product search. The union of the top-k items from each embedding pair forms the candidate set.

- Top-k average (TopKAvg): Calculate the dot product for all P embedding pairs. For each item, average the P dot product scores. Then, select the top-k items based on these averaged scores.

The paper also suggests a combined top-k heuristic, which takes the union of the candidate sets generated by TopKPerEmbd and TopKAvg. This approach can further improve recall at the cost of slightly increased computation.

Impressive Empirical Results

The paper showcases MoL's effectiveness on both recommendation systems and question answering tasks:

- Recommendation Systems: Using datasets like Movielens and Amazon Books, MoL consistently outperforms state-of-the-art baselines by significant margins (e.g., 29.1% improvement in HR@1).

- Question Answering: On the Natural Questions dataset, MoL surpasses strong dense, sparse, and generative retrieval baselines, achieving a new state-of-the-art.

Rethinking Vector Databases

The results presented in this paper raise an intriguing question: will MoL and RAILS lead to a paradigm shift in how vector databases are built? Given the impressive performance gains in both accuracy and latency, the move away from MIPS-based solutions towards GPU-accelerated learned similarities seems increasingly likely.

Thoughts and Shaped's Perspective

I’m always drawn to research that generalizes existing methods and opens up new avenues for exploration. This paper beautifully demonstrates that principle. By starting with a generalized similarity function and then deriving MoL, the authors have created a powerful and flexible framework for learned retrieval.

At Shaped, we're constantly exploring new ways to optimize retrieval. We're particularly interested in the potential of accelerated hardware for inference, and MoL fits perfectly into this vision. We're excited to experiment with RAILS and see how it can enhance our platform. This work could be a game-changer for anyone working with large-scale retrieval systems.

.png)